Fairness in Imaging with Deep Learning

We’ve all seen the moment in the movies—agents gather around a computer screen staring at a blurry image captured from a store security camera. With just a few clicks, magical ‘zoom and enhance’ software unblurs the fuzzy image, revealing the culprit’s license plate or the face of the villain.

It’s still science fiction, but the reality is that Deep Learning methods are getting closer to realizing this dream. Modern AI techniques can render high-quality images from blurry and low-resolution samples. These techniques can transform computational photography, medical imaging, increase resolution and accelerate MRI imaging and microscopy.

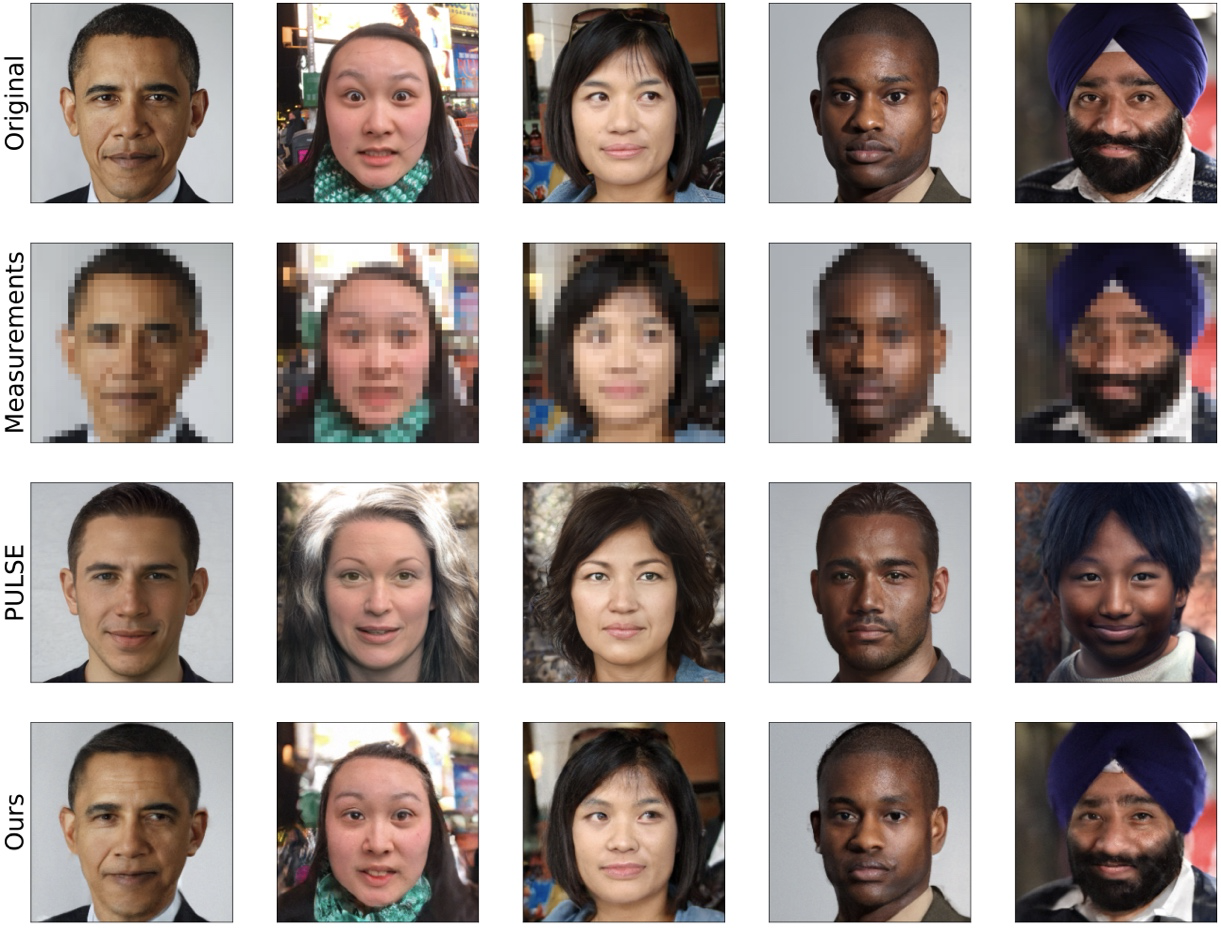

In the summer of 2020, a deep learning generative model with groundbreaking performance was posted on the web. The model could turn low-resolution images to high-quality photos. A user uploaded a low-resolution image of President Obama, to obtain an image that is now called ‘White Obama’, shown below. This is a startling display of bias in AI imaging algorithms: The deep learning model was reconstructing images with predominantly white features and a heated debate arose across Twitter and other social media: Was the source of the problem the bias in the training data, or in the reconstruction algorithm, and how could we fix it?

The algorithm that generated these images, PULSE, is using a deep generative model called StyleGAN that can produce artificial faces (like the ones shown in http://thispersondoesnotexist.com) but is optimizing the generated image to match the low-resolution input image after downscaling. StyleGAN was trained on a dataset of images of predominantly white people. Many researchers argued that this was the source of the bias, and that simply creating a balanced training dataset would solve the problem. Our recent work [1] shows that this is not the case: the reconstruction algorithm needs to be modified, in addition to the training set.

The first problem is that we need to think carefully about defining fairness in reconstructing images of people with various attributes. Traditional group fairness definitions are defined with respect to specified protected groups—camouflaging the fact that these groupings are artificial and carry historical and political motivations. For instance, should South and East Asians be viewed as a single group or separate groups? Should we consider one race as a whole or further split by gender? Choosing which groups are valid and who belongs in them is an impossible dilemma and being ‘‘fair’’ with respect to Asians may require being ‘‘unfair’’ with respect to South Asians. This motivates our introduction of oblivious fairness. The machine learning algorithm needs to work for all possible groupings of the population.

Our first result is that several intuitive notions of group fairness are incompatible and impossible to achieve simultaneously. We show that the natural extension of demographic parity is strongly dependent on the grouping, and impossible to achieve obliviously. We introduce a new definition of fairness called Conditional Proportional Representation which can be achieved obliviously (i.e. without defining specific protected groups) through a natural algorithm that we propose.

Our second result is that the reconstruction algorithm previously used (MAP inference) is amplifying any bias that can be present in the data. The essence of why is illustrated in the following toy example: Alice flips a biased coin that comes Heads with probability 0.6 and Tails with 0.4. Bob has to guess Heads or Tails, knowing this is a biased coin. If Bob wants to maximize his probability of winning, he will always guess Heads, even when the bias is only 60 percent. This increases the 60% dataset bias to 100%, and we observe this bias amplification phenomenon experimentally in our study. On the contrary, if Bob uses the algorithm we propose (posterior sampling), Bob will randomize his guess and propose Heads only 60 percent of the time, matching but not amplifying the bias in the training data.

Figure 2: An example of reconstructions using posterior sampling from [1] compared to the previous method PULSE which uses MAP inference and amplifies the bias.

As deep learning imaging algorithms become ubiquitous across smartphones, social networks, MRI scanners and a broad array of other applications, designing the correct metrics and understanding the foundations of deep learning is going to be key to ensure future deployments reflect the diverse and inclusive reality of our world.

References:

Fairness for Image Generation with Uncertain Sensitive Attributes

Ajil Jalal, Sushrut Karmalkar, Jessica Hoffmann, Alexandros G. Dimakis, Eric Price, International Conference on Machine Learning (ICML), 2021.